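I first trained a model through the web-ui on a custom dataset. After training finished, I selected the newly trained model in the web-ui and clicked Deploy directly, which failed with the following error: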

Traceback (most recent call last):

File "/opt/conda/lib/python3.10/site-packages/swift/cli/deploy.py", line 5, in <module>

deploy_main()

File "/opt/conda/lib/python3.10/site-packages/swift/utils/run_utils.py", line 31, in x_main

result = llm_x(args, **kwargs)

File "/opt/conda/lib/python3.10/site-packages/swift/llm/deploy.py", line 514, in llm_deploy

model, template = prepare_model_template(args)

File "/opt/conda/lib/python3.10/site-packages/swift/llm/infer.py", line 206, in prepare_model_template

model = Swift.from_pretrained(

File "/opt/conda/lib/python3.10/site-packages/swift/tuners/base.py", line 972, in from_pretrained

return SwiftModel.from_pretrained(

File "/opt/conda/lib/python3.10/site-packages/swift/tuners/base.py", line 362, in from_pretrained

raise ValueError('Mixed using with peft is not allowed now.')

ValueError: Mixed using with peft is not allowed now.

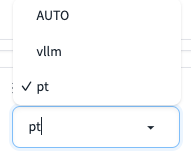

The inference framework defaults to pt. Switching to either of the other two options still produces the same error.

The deploy command is as follows (no peft-related parameters were set):

swift deploy --model_type qwen1half-7b-chat --template_type qwen --system You are a helpful assistant. --repetition_penalty 1.05 --ckpt_dir /mnt/workspace/output/qwen1half-7b-chat/v3-20240506-182158 --port 8000 --log_file /mnt/workspace/output/qwen1half-7b-chat-202456182431/run_deploy.log --ignore_args_error true

I ran into the same problem.

I found the answer in an already resolved swift issue:

https://github.com/modelscope/swift/issues/732

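My understanding is that you did not specify a concrete checkpoint: `--ckpt_dir` points at the run directory (`v3-20240506-182158`) rather than at a specific checkpoint inside it. Alternatively, merge the checkpoint first and then run the deployment.

The fine-tuning output is saved in segments (one checkpoint at a time), so if something is wrong with the data you do not have to delete everything.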
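As a rough illustration of "specify a concrete checkpoint", the sketch below picks the newest checkpoint folder inside a training run directory and builds a deploy command that points at it. It assumes that swift writes checkpoints as `checkpoint-<step>` subdirectories under the run directory; that layout is not shown in this thread, so check your own output folder first. The helper names `latest_checkpoint` and `deploy_command` are hypothetical and not part of swift, and the command only reuses the `--ckpt_dir` and `--port` flags that appear in the original command above.

```python
import re
from pathlib import Path
from typing import List, Optional


def latest_checkpoint(run_dir: str) -> Optional[Path]:
    """Return the checkpoint-<step> subdirectory with the highest step, or None.

    Assumption: checkpoints are stored as `checkpoint-<step>` folders
    directly under the run directory.
    """
    root = Path(run_dir)
    if not root.is_dir():
        return None
    best_step, best_path = -1, None
    for child in root.iterdir():
        match = re.fullmatch(r"checkpoint-(\d+)", child.name)
        if child.is_dir() and match and int(match.group(1)) > best_step:
            best_step, best_path = int(match.group(1)), child
    return best_path


def deploy_command(ckpt_dir: Path, port: int = 8000) -> List[str]:
    """Build the argument list for deploying one specific checkpoint."""
    return ["swift", "deploy", "--ckpt_dir", str(ckpt_dir), "--port", str(port)]


if __name__ == "__main__":
    # Run directory taken from the command in the original report.
    run_dir = "/mnt/workspace/output/qwen1half-7b-chat/v3-20240506-182158"
    ckpt = latest_checkpoint(run_dir)
    if ckpt is None:
        print(f"No checkpoint-<step> folder found under {run_dir}")
    else:
        print(" ".join(deploy_command(ckpt)))
```

The script only prints the command so that it can be reviewed before running. Choosing the highest step is a convenience; if an earlier checkpoint evaluated better, pass that folder instead.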
