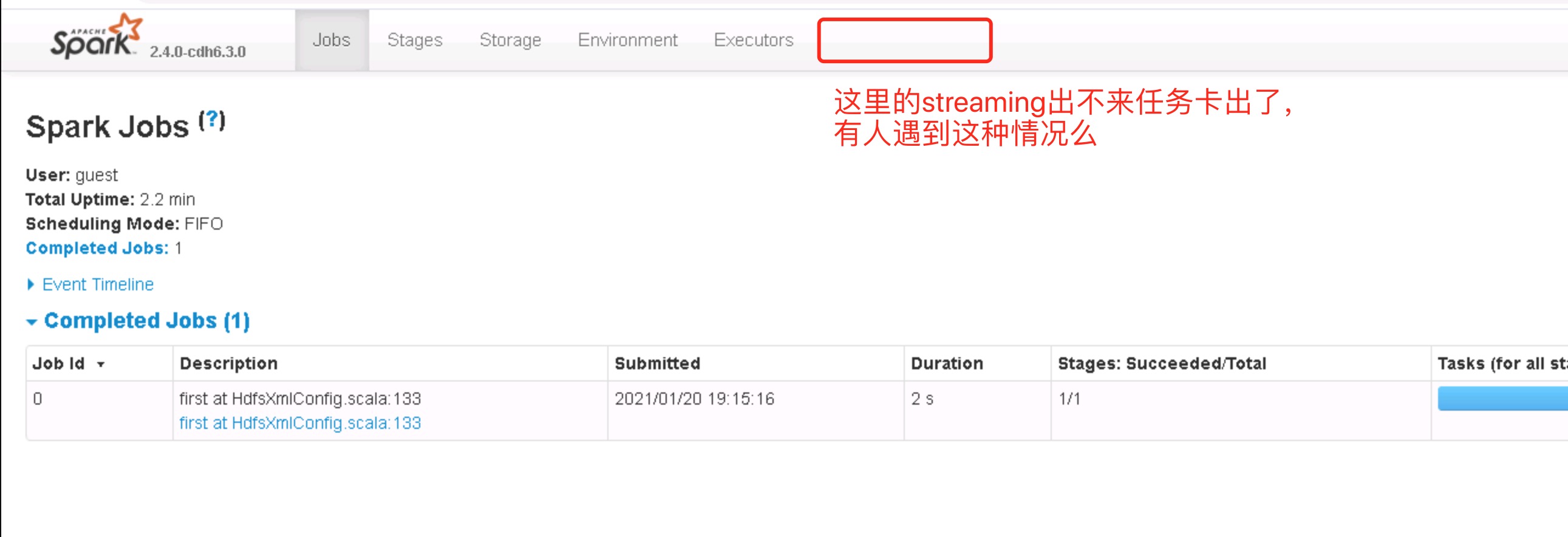

提交任务到yarn 显示running 我这个是新的groupId 在kafka上无法查询到group 再spark页面如上图所示,在yarn界面 卡住,没有任何日志打印

版权声明:本文内容由阿里云实名注册用户自发贡献,版权归原作者所有,阿里云开发者社区不拥有其著作权,亦不承担相应法律责任。具体规则请查看《阿里云开发者社区用户服务协议》和《阿里云开发者社区知识产权保护指引》。如果您发现本社区中有涉嫌抄袭的内容,填写侵权投诉表单进行举报,一经查实,本社区将立刻删除涉嫌侵权内容。

启动8个task的日志:

2016-06-18 10:16,167 | INFO | [sparkDriver-akka.actor.default-dispatcher-16] | Starting task 0.0 in stage 293749.0 (TID 1356848, scdxdsjkafka2, PROCESS_LOCAL, 1165 bytes) | org.apache.spark.Logging$class.logInfo(Logging.scala:59)

2016-06-18 10:16,167 | INFO | [sparkDriver-akka.actor.default-dispatcher-16] | Starting task 1.0 in stage 293749.0 (TID 1356849, scdxdsjkafka1, PROCESS_LOCAL, 1165 bytes) | org.apache.spark.Logging$class.logInfo(Logging.scala:59)

2016-06-18 10:16,168 | INFO | [sparkDriver-akka.actor.default-dispatcher-16] | Starting task 2.0 in stage 293749.0 (TID 1356850, scdxdsjkafka1, PROCESS_LOCAL, 1165 bytes) | org.apache.spark.Logging$class.logInfo(Logging.scala:59)

2016-06-18 10:16,169 | INFO | [sparkDriver-akka.actor.default-dispatcher-16] | Starting task 3.0 in stage 293749.0 (TID 1356851, scdxdsjkafka1, PROCESS_LOCAL, 1165 bytes) | org.apache.spark.Logging$class.logInfo(Logging.scala:59)

2016-06-18 10:16,169 | INFO | [sparkDriver-akka.actor.default-dispatcher-16] | Starting task 4.0 in stage 293749.0 (TID 1356852, scdxdsjkafka3, PROCESS_LOCAL, 1165 bytes) | org.apache.spark.Logging$class.logInfo(Logging.scala:59)

2016-06-18 10:16,169 | INFO | [sparkDriver-akka.actor.default-dispatcher-16] | Starting task 5.0 in stage 293749.0 (TID 1356853, scdxdsjkafka2, PROCESS_LOCAL, 1165 bytes) | org.apache.spark.Logging$class.logInfo(Logging.scala:59)

2016-06-18 10:16,170 | INFO | [sparkDriver-akka.actor.default-dispatcher-16] | Starting task 6.0 in stage 293749.0 (TID 1356854, scdxdsjkafka2, PROCESS_LOCAL, 1165 bytes) | org.apache.spark.Logging$class.logInfo(Logging.scala:59)

2016-06-18 10:16,170 | INFO | [sparkDriver-akka.actor.default-dispatcher-16] | Starting task 7.0 in stage 293749.0 (TID 1356855, scdxdsjkafka3, PROCESS_LOCAL, 1165 bytes) |

2、7个task任务完成(task 1356848 丢失):

org.apache.spark.Logging$class.logInfo(Logging.scala:59)

2016-06-18 10:16,468 | INFO | [task-result-getter-2] | Finished task 1.0 in stage 293749.0 (TID 1356849) in 301 ms on scdxdsjkafka1 (1/8) | org.apache.spark.Logging$class.logInfo(Logging.scala:59)

2016-06-18 10:16,472 | INFO | [task-result-getter-3] | Finished task 5.0 in stage 293749.0 (TID 1356853) in 303 ms on scdxdsjkafka2 (2/8) | org.apache.spark.Logging$class.logInfo(Logging.scala:59)

2016-06-18 10:16,474 | INFO | [task-result-getter-1] | Finished task 3.0 in stage 293749.0 (TID 1356851) in 306 ms on scdxdsjkafka1 (3/8) | org.apache.spark.Logging$class.logInfo(Logging.scala:59)

2016-06-18 10:16,480 | INFO | [task-result-getter-0] | Finished task 7.0 in stage 293749.0 (TID 1356855) in 310 ms on scdxdsjkafka3 (4/8) | org.apache.spark.Logging$class.logInfo(Logging.scala:59)

2016-06-18 10:16,485 | INFO | [task-result-getter-2] | Finished task 6.0 in stage 293749.0 (TID 1356854) in 315 ms on scdxdsjkafka2 (5/8) | org.apache.spark.Logging$class.logInfo(Logging.scala:59)

2016-06-18 10:16,494 | INFO | [task-result-getter-3] | Finished task 4.0 in stage 293749.0 (TID 1356852) in 325 ms on scdxdsjkafka3 (6/8) | org.apache.spark.Logging$class.logInfo(Logging.scala:59)

2016-06-18 10:16,511 | INFO | [task-result-getter-1] | Finished task 2.0 in stage 293749.0 (TID 1356850) in 343 ms on scdxdsjkafka1 (7/8) | org.apache.spark.Logging$class.logInfo(Logging.scala:59)

2016-06-18 10:16,027 | INFO | [JobGenerator] | Added jobs for time 1466216220000 ms | org.apache.spark.Logging$class.logInfo(Logging.scala:59)

2016-06-18 10:16,035 | INFO | [JobGenerator] | Added jobs for time 1466216230000 ms | org.apache.spark.Logging$class.logInfo(Logging.scala:59)

问题出现时的截图(一个task阻塞,阻塞6个小时):

20161017113556297001.png

Task统计信息中缺少一个task:

http://hi3ms-image.huawei.com/hi/showimage-1430645815-408595-88bbc1a0e3abcd228e1a3bb4431202ce.jpg

解决方法

1、 采取推测机制: 在spark-default.conf 中添加:spark.speculation true 配置关于推测机制的三个参数如下:

l spark.speculation.interval 100:检测周期,单位毫秒;

l spark.speculation.quantile 0.75:完成task的百分比时启动推测;

l spark.speculation.multiplier 1.5:比其他的慢多少倍时启动推测。

2、设置 spark.streaming.concurrentJobs 4

开启推测机制后,如果集群中,某一台机器的几个task特别慢,推测机制会将任务分配到其他机器执行,最后Spark会选取最快的作为最终结果。

spark.streaming.concurrentJobs可以控制job并发度,默认是1,某一个Job卡死之后,会影响后续Job的执行。现在将其设置为4,如果某个Job卡死,不会影响后续Job的执行。

技术角度分析,设置上述两个参数之后,可以规避某个Task挂死的问题。因为此问题,现网也是运行3-4天才出现的随机问题,无法立即复现验证,目前只能采取这种规避方案。

阿里云EMR是云原生开源大数据平台,为客户提供简单易集成的Hadoop、Hive、Spark、Flink、Presto、ClickHouse、StarRocks、Delta、Hudi等开源大数据计算和存储引擎,计算资源可以根据业务的需要调整。EMR可以部署在阿里云公有云的ECS和ACK平台。