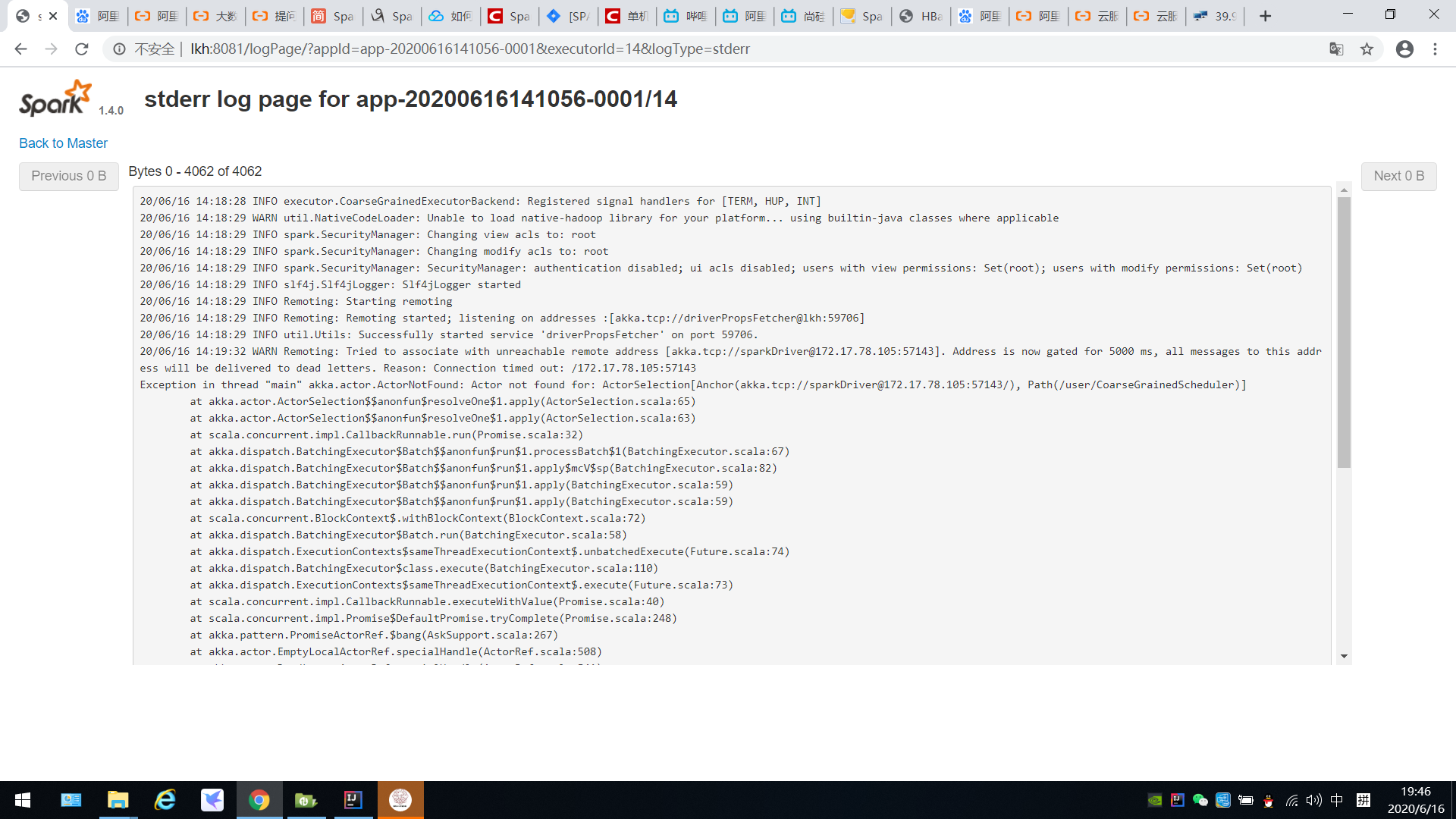

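After submitting a Spark job on Alibaba Cloud, I get the error below. It says the sparkDriver cannot be found, followed by the host's private IP address. What is going on?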

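Pitfalls that cost me a lot of time:

1. In the /etc/hosts file:

127.0.0.1 localhost

YOUR_PRIVATE_IP YOUR_HOSTNAME

In the corresponding spark-env.sh file:

export SPARK_LOCAL_IP=YOUR_HOSTNAME

export SPARK_MASTER_HOST=YOUR_HOSTNAME

In the corresponding slaves file:

YOUR_HOSTNAME

If you use the public IP instead, the master fails to start with this error: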
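The setup above only works if the hostname resolves to the private IP rather than to a loopback or public address. A minimal Python sketch for checking that is shown here; the function names `classify` and `classify_hostname` are hypothetical helpers for illustration, not part of Spark.

```python
import ipaddress
import socket


def classify(ip: str) -> str:
    """Return 'loopback', 'private', or 'public' for an IP address string."""
    addr = ipaddress.ip_address(ip)
    if addr.is_loopback:  # checked first: loopback addresses also count as private
        return "loopback"
    if addr.is_private:
        return "private"
    return "public"


def classify_hostname(hostname: str) -> str:
    """Resolve a hostname via the OS resolver (which consults /etc/hosts) and classify it."""
    return classify(socket.gethostbyname(hostname))


if __name__ == "__main__":
    name = socket.gethostname()
    try:
        print(name, "->", classify_hostname(name))
    except OSError as exc:  # raised when the hostname does not resolve at all
        print(name, "does not resolve:", exc)
```

For the configuration described above, the expected result for the machine's own hostname is `private`.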

17/07/24 21:16:03 WARN Utils: Service 'sparkMaster' could not bind on port 7077. Attempting port 7078.

17/07/24 21:16:03 WARN Utils: Service 'sparkMaster' could not bind on port 7078. Attempting port 7079.

17/07/24 21:16:03 WARN Utils: Service 'sparkMaster' could not bind on port 7079. Attempting port 7080.

17/07/24 21:16:03 WARN Utils: Service 'sparkMaster' could not bind on port 7080. Attempting port 7081.

17/07/24 21:16:03 WARN Utils: Service 'sparkMaster' could not bind on port 7081. Attempting port 7082.

17/07/24 21:16:03 WARN Utils: Service 'sparkMaster' could not bind on port 7082. Attempting port 7083.

17/07/24 21:16:03 WARN Utils: Service 'sparkMaster' could not bind on port 7083. Attempting port 7084.

17/07/24 21:16:03 WARN Utils: Service 'sparkMaster' could not bind on port 7084. Attempting port 7085.

17/07/24 21:16:03 WARN Utils: Service 'sparkMaster' could not bind on port 7085. Attempting port 7086.

17/07/24 21:16:03 WARN Utils: Service 'sparkMaster' could not bind on port 7086. Attempting port 7087.

17/07/24 21:16:03 WARN Utils: Service 'sparkMaster' could not bind on port 7087. Attempting port 7088.

17/07/24 21:16:03 WARN Utils: Service 'sparkMaster' could not bind on port 7088. Attempting port 7089.

17/07/24 21:16:03 WARN Utils: Service 'sparkMaster' could not bind on port 7089. Attempting port 7090.

17/07/24 21:16:03 WARN Utils: Service 'sparkMaster' could not bind on port 7090. Attempting port 7091.

17/07/24 21:16:03 WARN Utils: Service 'sparkMaster' could not bind on port 7091. Attempting port 7092.

17/07/24 21:16:03 WARN Utils: Service 'sparkMaster' could not bind on port 7092. Attempting port 7093.

Exception in thread "main" java.net.BindException: Cannot assign requested address: Service 'sparkMaster' failed after 16 retries (starting from 7077)! Consider explicitly setting the appropriate port for the service 'sparkMaster' (for example spark.ui.port for SparkUI) to an available port or increasing spark.port.maxRetries.
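"Cannot assign requested address" is what the operating system reports when a process tries to bind a socket to an address that is not configured on any local network interface. That would explain the failure if the public IP is mapped to the instance by NAT instead of being assigned to a NIC, which is an assumption about this particular setup. The sketch below reproduces the same check outside Spark; `can_bind` is a hypothetical helper name.

```python
import errno
import socket
import sys


def can_bind(host: str, port: int = 0) -> bool:
    """Return True if a TCP socket can bind to host; port 0 lets the OS pick a free port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
            return True
        except OSError as exc:
            # EADDRNOTAVAIL is the errno behind "Cannot assign requested address"
            if exc.errno == errno.EADDRNOTAVAIL:
                return False
            raise  # any other error (for example, an unresolvable name) is not masked


if __name__ == "__main__":
    # Pass the addresses to test on the command line; defaults to loopback.
    for host in sys.argv[1:] or ["127.0.0.1"]:
        print(host, "bindable:", can_bind(host))
```

Running it with the private IP and then the public IP as arguments should show which of the two the machine can actually bind to.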

2. A Spark 2.1.1 client cannot connect with spark-shell to a Spark 2.2.0 cluster. The two versions turn out to be incompatible, and it fails right away with:

org.apache.spark.SparkException: Exception thrown in awaitResult

The Spark version on my server is 2.2.0.

The Spark version on my own computer is 2.1.1.

This cost me a whole evening.
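A small pre-flight comparison of the two version strings can catch this kind of mismatch before connecting. The sketch below assumes that differing major.minor numbers are the signal to look for; the post only confirms that 2.1.1 against 2.2.0 fails, so matching versions exactly is the safest choice. The names `major_minor` and `same_release_line` are hypothetical.

```python
from typing import Tuple


def major_minor(version: str) -> Tuple[int, int]:
    """Extract (major, minor) from a version string such as '2.2.0'."""
    major, minor, *_ = version.split(".")
    return int(major), int(minor)


def same_release_line(client: str, server: str) -> bool:
    """Return True when client and server share the same major.minor version."""
    return major_minor(client) == major_minor(server)


if __name__ == "__main__":
    client, server = "2.1.1", "2.2.0"  # the versions reported in this post
    if not same_release_line(client, server):
        print(f"Version mismatch: client {client} vs server {server}")
```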