Hadoop 2.3: job submitted from Eclipse to the cluster fails because it cannot find its input?

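Problem description: I want to submit a word-count job from Eclipse on Windows to a cluster running in virtual machines, but it throws an exception. Any pointers would be appreciated.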

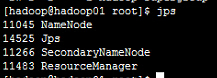

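Screenshots: the cluster is running normally, as shown:

(These are the processes on the master node. The DataNodes run on two other machines and are also healthy.)

The HDFS input directory also looks fine, as shown: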

Error output when the job is run from Eclipse:

2016-01-10 13:36:15,643 WARN [main] security.UserGroupInformation (UserGroupInformation.java:doAs(1551)) - PriviledgedActionException as:hadoop (auth:SIMPLE) cause:org.apache.hadoop.mapreduce.lib.input.InvalidInputException: Input path does not exist: hdfs://192.168.88.128:9000/hadoop/input
Exception in thread "main" org.apache.hadoop.mapreduce.lib.input.InvalidInputException: Input path does not exist: hdfs://192.168.88.128:9000/hadoop/input
    at org.apache.hadoop.mapreduce.lib.input.FileInputFormat.listStatus(FileInputFormat.java:285)
    at org.apache.hadoop.mapreduce.lib.input.FileInputFormat.getSplits(FileInputFormat.java:340)
    at org.apache.hadoop.mapreduce.JobSubmitter.writeNewSplits(JobSubmitter.java:493)
    at org.apache.hadoop.mapreduce.JobSubmitter.writeSplits(JobSubmitter.java:510)
    at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:394)
    at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1295)
    at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1292)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:415)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1548)
    at org.apache.hadoop.mapreduce.Job.submit(Job.java:1292)
    at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1313)
    at com.battier.hadoop.test.WordCount.main(WordCount.java:145)

HDFS is also reachable:

The word-count code being run:

    import java.text.SimpleDateFormat;
    import java.util.Date;
    import org.apache.hadoop.conf.Configuration;

    // ... inside WordCount.main(String[] args):

    /**
     * Configuration for connecting to the Hadoop cluster
     */
    Configuration conf = new Configuration();
    conf.set("dfs.permissions", "false"); // old name of dfs.permissions.enabled; only the NameNode's own setting takes effect
    //conf.set("fs.default.name", "hdfs://192.168.88.128:9000");
    conf.set("fs.defaultFS", "hdfs://hadoop01:9000");
    conf.set("hadoop.job.user", "hadoop");
    conf.set("mapreduce.framework.name", "yarn");
    conf.set("mapreduce.jobtracker.address", "192.168.88.128:9001"); // MRv1 (JobTracker) setting; not used when the framework is yarn
    conf.set("yarn.resourcemanager.hostname", "192.168.88.128");
    conf.set("yarn.resourcemanager.admin.address", "192.168.88.128:8033");
    conf.set("yarn.resourcemanager.address", "192.168.88.128:8032");
    conf.set("yarn.resourcemanager.resource-tracker.address", "192.168.88.128:8031");
    conf.set("yarn.resourcemanager.scheduler.address", "192.168.88.128:8030");

    String[] otherArgs = new String[2];
    otherArgs[0] = "hdfs://hadoop01:9000/hadoop/input"; // input directory; the files to count must be uploaded here beforehand
    String time = new SimpleDateFormat("yyyyMMddHHmmss").format(new Date());
    otherArgs[1] = "hdfs://hadoop01:9000/hadoop/output/" + time; // output directory; it must differ on every run, so a timestamp is appended
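The timestamp suffix in the last line is needed because FileOutputFormat refuses to submit a job whose output directory already exists (it throws FileAlreadyExistsException). A minimal stdlib-only sketch of the same naming scheme, assuming a hypothetical helper class OutputDirNaming: it uses java.time instead of SimpleDateFormat (DateTimeFormatter is immutable and thread-safe) and takes the time as a parameter so the result is reproducible.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

// Hypothetical helper, not part of the original WordCount code.
public class OutputDirNaming {
    // Same pattern as the SimpleDateFormat in the question.
    private static final DateTimeFormatter STAMP =
            DateTimeFormatter.ofPattern("yyyyMMddHHmmss");

    // Appends a timestamp directory to the output base, tolerating a trailing slash.
    public static String outputDir(String base, LocalDateTime when) {
        String trimmed = base.endsWith("/") ? base.substring(0, base.length() - 1) : base;
        return trimmed + "/" + STAMP.format(when);
    }

    public static void main(String[] args) {
        System.out.println(outputDir("hdfs://hadoop01:9000/hadoop/output/", LocalDateTime.now()));
    }
}
```

Two submissions within the same second would still get the same directory; if that can happen, an extra random or sequence suffix would be needed.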

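Most of the ResourceManager settings in the code above repeat Hadoop 2.x defaults: yarn-default.xml defines each RM address as ${yarn.resourcemanager.hostname} plus a fixed port, so setting only yarn.resourcemanager.hostname should yield the same values, assuming the cluster keeps the default ports. The sketch below (hypothetical class YarnAddressDefaults, no Hadoop dependency) only reproduces that derivation so the values can be compared with the explicit conf.set(...) calls.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical illustration of how yarn-default.xml derives RM addresses.
public class YarnAddressDefaults {
    // Default ports as in Hadoop 2.x yarn-default.xml (assumed not overridden on the cluster).
    public static Map<String, String> deriveAddresses(String rmHost) {
        Map<String, String> addrs = new LinkedHashMap<String, String>();
        addrs.put("yarn.resourcemanager.address", rmHost + ":8032");
        addrs.put("yarn.resourcemanager.scheduler.address", rmHost + ":8030");
        addrs.put("yarn.resourcemanager.resource-tracker.address", rmHost + ":8031");
        addrs.put("yarn.resourcemanager.admin.address", rmHost + ":8033");
        return addrs;
    }

    public static void main(String[] args) {
        for (Map.Entry<String, String> e : deriveAddresses("192.168.88.128").entrySet()) {
            System.out.println(e.getKey() + " = " + e.getValue());
        }
    }
}
```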

1 answer


Bumping this. Is it because of this: Input path does not exist: hdfs://192.168.88.128:9000/hadoop/input?

Thanks! hadoop01 is 192.168.88.128.

Run hadoop fs -ls /hadoop/input first to verify that your path is correct.
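Since hadoop01 and 192.168.88.128 are the same machine, the hostname in the code and the IP in the exception should name the same HDFS path, provided the Windows client resolves hadoop01 to that address (through its hosts file or DNS). This stdlib-only sketch makes the comparison explicit; the class SameHdfsPath and its one-entry hosts table are hypothetical stand-ins for real name resolution. If both forms normalize to the same URI, the hostname is not the problem and the path itself is what hadoop fs -ls should check.

```java
import java.net.URI;
import java.util.Collections;
import java.util.Map;

// Hypothetical helper; the HOSTS table stands in for the client's hosts file / DNS.
public class SameHdfsPath {
    static final Map<String, String> HOSTS =
            Collections.singletonMap("hadoop01", "192.168.88.128");

    // Rewrites the host of an hdfs:// URI to its IP if known (assumes an explicit port).
    public static String normalize(String hdfsUri) {
        URI u = URI.create(hdfsUri);
        String host = HOSTS.containsKey(u.getHost()) ? HOSTS.get(u.getHost()) : u.getHost();
        return u.getScheme() + "://" + host + ":" + u.getPort() + u.getPath();
    }

    public static void main(String[] args) {
        String inCode = "hdfs://hadoop01:9000/hadoop/input";
        String inError = "hdfs://192.168.88.128:9000/hadoop/input";
        System.out.println(normalize(inCode).equals(normalize(inError))); // true
    }
}
```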

"Input path does not exist" means one of two things: 1. the path does not exist in your HDFS; 2. the file is not there.

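Thanks for the guidance! In Hadoop 2.x, do the input and output paths need the name of the user Hadoop was installed under as their root directory?

Reply to @battier: No, that is not necessary. Just remember to put those three files on your classpath.

2020-06-10 15:10:25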
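On the username question: in Hadoop 2.x a relative HDFS path (no leading slash) is resolved against the user's home directory, /user/<username>, while an absolute path such as /hadoop/input is used exactly as written. So the username only matters for relative paths. A stdlib-only sketch of that resolution with java.net.URI.resolve; the class HdfsPathQualifier is hypothetical, and Hadoop itself does this through the FileSystem's working directory (e.g. FileSystem.makeQualified).

```java
import java.net.URI;

// Hypothetical helper mimicking how a path is qualified against fs.defaultFS
// and the HDFS home directory /user/<user>.
public class HdfsPathQualifier {
    public static URI qualify(String defaultFs, String user, String path) {
        URI home = URI.create(defaultFs + "/user/" + user + "/");
        return home.resolve(path); // absolute paths keep their own path; relative ones go under home
    }

    public static void main(String[] args) {
        String fs = "hdfs://hadoop01:9000";
        System.out.println(qualify(fs, "hadoop", "/hadoop/input")); // hdfs://hadoop01:9000/hadoop/input
        System.out.println(qualify(fs, "hadoop", "input"));         // hdfs://hadoop01:9000/user/hadoop/input
    }
}
```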

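Copyright notice: The content of this article was contributed voluntarily by real-name registered Alibaba Cloud users, and the copyright belongs to the original authors. The Alibaba Cloud Developer Community does not own its copyright and assumes no related legal liability. For the specific rules, see the "Alibaba Cloud Developer Community User Service Agreement" and the "Alibaba Cloud Developer Community Intellectual Property Protection Guidelines". If you find content in this community suspected of plagiarism, fill in the infringement complaint form to report it; once verified, the community will immediately remove the infringing content.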
