Spider source code

    def parse(self, response):
        data = json.loads(response.text)['result']['data']
        if data is None:
            return
        for star in data:  # renamed from `str`, which shadows the builtin
            it_item = SinastarItem()
            it_item['userid'] = star['_id']
            it_item['name'] = star['title']
            it_item['starurl'] = star['url']
            it_item['pic'] = star['pic']
            if star['birth_year'] != '' and star['birth_month'] != '' and star['birth_day'] != '':
                it_item['birthday'] = star['birth_year'] + "/" + star['birth_month'] + "/" + star['birth_day']
            else:
                it_item['birthday'] = ''
            it_item['xingzuo'] = star['astrology']
            it_item['sex'] = star['gender']
            it_item['profession'] = star['profession']
            it_item['area'] = star['nationality']
            it_item['height'] = star['height']
            if it_item['userid'] is not None:
                intro_url = 'http://ent.sina.com.cn/ku/star_detail_index.d.html?type=intro&id=' + it_item['userid']
                base_url = 'http://ent.sina.com.cn/ku/star_detail_index.d.html?type=base&id=' + it_item['userid']
                photo_url = 'http://ent.sina.com.cn/ku/star_detail_index.d.html?type=photo&id=' + it_item['userid']
                # Three independent requests share the same item; each callback returns it separately
                yield scrapy.Request(intro_url, callback=self.info_item, meta={'item': it_item, 'type': 'intro'})
                yield scrapy.Request(base_url, callback=self.info_item, meta={'item': it_item, 'type': 'base'})
                yield scrapy.Request(photo_url, callback=self.photo_item, meta={'item': it_item})

    # Photo gallery
    def photo_item(self, response):
        item = response.meta['item']
        # Extract the image URLs of the photo gallery
        photoji = response.xpath("//*[@id='waterfall_roles']/li/a/img/@src").extract()
        if len(photoji) > 10:
            # Pick 10 URLs from the list at random
            imgurl = random.sample(photoji, 10)
            item['imgurl'] = ','.join(imgurl)
        else:
            # extract() always returns a list (possibly empty), so join is safe here
            item['imgurl'] = ','.join(photoji)
        return item

    # Introduction and basic info
    def info_item(self, response):
        item = response.meta['item']
        infodata = response.xpath("//div[@class='detail-base']/p/text()").extract()
        if response.meta['type'] == 'intro':  # introduction page
            item['intro'] = infodata
        else:
            item['base'] = infodata
        return item

Pipeline source code

    def process_item(self, item, spider):
        data = dict(item)
        imgurl = data['imgurl']
        base = data['base']
        intro = data['intro']
        userid = data['userid']
        name = data['name']
        sex = data['sex']
        area = data['area']
        xingzuo = data['xingzuo']  # empty
        birthday = data['birthday']  # empty
        height = data['height']  # empty
        pic = data['pic']
        profession = data['profession']
        try:
            # Check whether this userid is already stored
            onlysql = "select * from tw_cms_article_star where userid = %s"
            # Run the query; values are passed as parameters, not formatted into the SQL string
            self.cur.execute(onlysql, (userid,))
            repetition = self.cur.fetchone()
            if repetition is not None:
                # Already present, so do not insert again
                pass
            else:
                self.cur.execute(
                    """insert into tw_cms_article_star (name,sex,area,xingzuo,birthday,height,pic,userid,intro,base,profession,imgurl)
                    values(%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)""",
                    (name, sex, area, xingzuo, birthday, height, pic, userid, intro, base, profession, imgurl))
                # Commit the insert
                self.mydb.commit()
        except Exception as error:
            # Log the error and roll back
            logging.error(error)
            self.mydb.rollback()
        # The cursor and connection are reused for every item, so they are not closed here
        # (closing them belongs in close_spider)
        return item

    imgurl = data['imgurl']
    base = data['base']
    intro = data['intro']

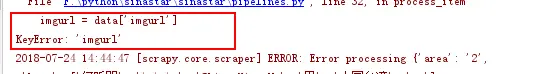

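These three variables are not filled from the initial page. The item is created while parsing the initial page, and these fields are assigned later from the next-level pages that are crawled afterwards.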

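Goal: combine the data scraped from the initial page with the data from several other pages, and insert everything into the database in one go.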

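Problem: printing the scraped data shows three separate results per star. Each one contains the data from the initial page plus whatever the other pages have added so far. As a result, the first time the pipeline runs, imgurl, base, and intro do not all exist yet and a KeyError is raised. I tried checking whether the keys exist, but it still keeps failing, so the insert into the database never succeeds.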
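As a stopgap for the KeyError alone, the pipeline could read fields with `dict.get` and a default instead of indexing. The sketch below is only an illustration: `FIELDS` and `to_row` are hypothetical names, not part of the original code, and the column order is copied from the insert statement in the post. It also joins list values, since `extract()` returns a list of strings.

```python
# Column order copied from the insert statement in the post.
FIELDS = ('name', 'sex', 'area', 'xingzuo', 'birthday', 'height',
          'pic', 'userid', 'intro', 'base', 'profession', 'imgurl')


def to_row(data):
    """Return the column values in insert order, using '' for missing fields."""
    row = []
    for key in FIELDS:
        value = data.get(key, '')  # no KeyError when a field is not set yet
        if isinstance(value, (list, tuple)):
            # extract() yields a list of strings; store it as one text value.
            value = ''.join(value)
        row.append(value)
    return tuple(row)


# A partial item, as the pipeline would see it after only the intro page.
partial = {'userid': '42', 'name': 'Example', 'intro': ['line 1', 'line 2']}
row = to_row(partial)
```

This only avoids the exception. Because the pipeline skips any userid that is already stored, the first partial item would be inserted and the later, more complete ones would be skipped, so the rows would still be incomplete.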

Is there a better way to solve this?
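One common way to get a single complete item per star is to chain the detail requests: each callback adds its field and requests the next page, and only the last callback yields the item. The sketch below shows that flow under stated assumptions. `FakeRequest`, `FakeResponse`, and `run` are hypothetical stand-ins so the snippet runs without Scrapy; in a real spider they would be `scrapy.Request`, the response object, and the Scrapy engine, and the callbacks would be spider methods that use the XPath expressions from the post.

```python
from dataclasses import dataclass, field

BASE = 'http://ent.sina.com.cn/ku/star_detail_index.d.html?type={kind}&id={userid}'


@dataclass
class FakeRequest:
    """Stand-in for scrapy.Request: a URL, a callback, and a meta dict."""
    url: str
    callback: object
    meta: dict = field(default_factory=dict)


@dataclass
class FakeResponse:
    """Stand-in for a response: carries the request meta and the page data."""
    meta: dict
    data: object


def parse_star(star):
    # Step 1: build the item from the list page, then request only the intro page.
    item = {'userid': star['_id'], 'name': star['title']}
    yield FakeRequest(BASE.format(kind='intro', userid=item['userid']),
                      callback=parse_intro, meta={'item': item})


def parse_intro(response):
    # Step 2: add the intro, then hand the same item on to the base page.
    item = response.meta['item']
    item['intro'] = response.data
    yield FakeRequest(BASE.format(kind='base', userid=item['userid']),
                      callback=parse_base, meta={'item': item})


def parse_base(response):
    # Step 3: add the base info, then hand the item on to the photo page.
    item = response.meta['item']
    item['base'] = response.data
    yield FakeRequest(BASE.format(kind='photo', userid=item['userid']),
                      callback=parse_photo, meta={'item': item})


def parse_photo(response):
    # Last step: the item is complete only now, so it is yielded exactly once.
    item = response.meta['item']
    item['imgurl'] = ','.join(response.data)
    yield item


def run(start, pages):
    """Tiny driver that mimics the engine: follow requests, collect items."""
    queue, items = list(start), []
    while queue:
        result = queue.pop(0)
        if isinstance(result, FakeRequest):
            response = FakeResponse(meta=result.meta, data=pages[result.url])
            queue.extend(result.callback(response))
        else:
            items.append(result)
    return items


# Made-up page contents, keyed by URL, for the demonstration only.
pages = {
    BASE.format(kind='intro', userid='42'): 'intro text',
    BASE.format(kind='base', userid='42'): 'base text',
    BASE.format(kind='photo', userid='42'): ['a.jpg', 'b.jpg'],
}
items = run(parse_star({'_id': '42', 'title': 'Example'}), pages)
```

With this shape the pipeline sees one item per star that already has intro, base, and imgurl. The trade-off is that the three detail pages are fetched one after another, and if an intermediate request fails the item never reaches the pipeline unless the request also sets an errback.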
