Graph Neural Networks: A Review of Methods and Applications (Translation and Commentary)

Jie Zhou∗, Ganqu Cui∗, Zhengyan Zhang∗, Cheng Yang, Zhiyuan Liu, Lifeng Wang, Changcheng Li, Maosong Sun

Original paper: https://static.aminer.cn/upload/pdf/1248/1346/1079/5ede0553e06a4c1b26a83e27_0.pdf

Abstract

Many learning tasks require dealing with graph data, which contains rich relational information among elements. Modeling physical systems, learning molecular fingerprints, predicting protein interfaces, and classifying diseases all require a model that learns from graph inputs. In other domains, such as learning from non-structural data like texts and images, reasoning on extracted structures (for example, the dependency tree of a sentence or the scene graph of an image) is an important research topic that also needs graph reasoning models. Graph neural networks (GNNs) are connectionist models that capture the dependence of graphs via message passing between the nodes of graphs. Unlike standard neural networks, graph neural networks retain a state that can represent information from a node's neighborhood with arbitrary depth. Although the primitive GNNs were found difficult to train to a fixed point, recent advances in network architectures, optimization techniques, and parallel computation have enabled successful learning with them. In recent years, systems based on variants of graph neural networks such as the graph convolutional network (GCN), graph attention network (GAT), and gated graph neural network (GGNN) have demonstrated ground-breaking performance on many of the tasks mentioned above. In this survey, we provide a detailed review of existing graph neural network models, systematically categorize the applications, and propose four open problems for future research.

1 INTRODUCTION

Graphs are a kind of data structure that models a set of objects (nodes) and their relationships (edges). Recently, research on analyzing graphs with machine learning has been receiving more and more attention because of the great expressive power of graphs: graphs can denote a large number of systems across various areas, including social science (social networks) [1], [2], natural science (physical systems [3], [4] and protein-protein interaction networks [5]), knowledge graphs [6], and many other research areas [7]. Because graphs are a unique non-Euclidean data structure for machine learning, graph analysis focuses on node classification, link prediction, and clustering. Graph neural networks (GNNs) are deep-learning-based methods that operate on the graph domain. Due to their convincing performance and high interpretability, GNNs have recently become a widely applied graph analysis method. In the following paragraphs, we illustrate the fundamental motivations of graph neural networks.

The first motivation of GNNs is rooted in convolutional neural networks (CNNs) [8]. CNNs can extract multi-scale localized spatial features and compose them to construct highly expressive representations, which led to breakthroughs in almost all machine learning areas and started the new era of deep learning [9]. Looking more closely at CNNs and graphs, we find three keys to CNNs: local connection, shared weights, and the use of multiple layers [9]. These are also of great importance in solving problems in the graph domain, because 1) graphs are the most typical locally connected structure; 2) shared weights reduce the computational cost compared with traditional spectral graph theory [10]; and 3) a multi-layer structure is the key to dealing with hierarchical patterns, since it captures features of various sizes. However, CNNs can only operate on regular Euclidean data like images (2D grids) and text (1D sequences), even though these data structures can themselves be regarded as instances of graphs. Therefore, it is natural to look for a generalization of CNNs to graphs. As shown in Fig. 1, however, it is hard to define localized convolutional filters and pooling operators on graphs, which hinders the transfer of CNNs from the Euclidean domain to the non-Euclidean domain.

The other motivation comes from graph embedding [11]–[15], which learns to represent graph nodes, edges, or subgraphs as low-dimensional vectors. In the field of graph analysis, traditional machine learning approaches usually rely on hand-engineered features and are limited by their inflexibility and high cost. Following the idea of representation learning and the success of word embedding [16], DeepWalk [17], which is regarded as the first graph embedding method based on representation learning, applies the SkipGram model [16] to generated random walks. Similar approaches such as node2vec [18], LINE [19], and TADW [20] also achieved breakthroughs. However, these methods suffer from two severe drawbacks [12]. First, no parameters are shared between nodes in the encoder, which leads to computational inefficiency, since the number of parameters grows linearly with the number of nodes. Second, the direct embedding methods lack the ability to generalize, which means they cannot deal with dynamic graphs or generalize to new graphs. Based on CNNs and graph embedding, graph neural networks (GNNs) are proposed to collectively aggregate information from the graph structure. Thus they can model inputs and/or outputs consisting of elements and their dependencies. Further, a graph neural network can simultaneously model the diffusion process on the graph with an RNN kernel.

In the following part, we explain the fundamental reasons why graph neural networks are worth investigating. Firstly, standard neural networks like CNNs and RNNs cannot handle graph input properly because they stack the features of nodes in a specific order, whereas a graph has no natural node order. To present a graph completely to a model like a CNN or RNN, we would have to traverse all possible orders as input, which is highly redundant to compute. To solve this problem, GNNs propagate on each node separately, ignoring the input order of nodes. In other words, the output of GNNs is invariant to the input order of nodes. Secondly, an edge in a graph represents a dependency between two nodes. In standard neural networks, this dependency information is merely treated as a node feature. GNNs, however, can propagate information guided by the graph structure instead of using it as part of the features. Generally, GNNs update the hidden state of each node with a weighted sum of the states of its neighborhood. Thirdly, reasoning is a very important research topic for high-level artificial intelligence, and the reasoning process in the human brain is largely based on graphs extracted from daily experience. Standard neural networks have shown the ability to generate synthetic images and documents by learning the distribution of data, but they still cannot learn a reasoning graph from large experimental data. GNNs, in contrast, explore generating the graph from non-structural data like scene pictures and story documents, which can make them a powerful neural model for further high-level AI. Recently, it has been shown that an untrained GNN with a simple architecture also performs well [21].

There exist several comprehensive reviews on graph neural networks. [22] proposed a unified framework, MoNet, to generalize CNN architectures to non-Euclidean domains (graphs and manifolds); the framework could generalize several spectral methods on graphs [2], [23] as well as some models on manifolds [24], [25]. [26] provides a thorough review of geometric deep learning, presenting its problems, difficulties, solutions, applications, and future directions. [22] and [26] focus on generalizing convolutions to graphs or manifolds; in this paper, however, we focus only on problems defined on graphs, and we also investigate other mechanisms used in graph neural networks, such as the gate mechanism, attention mechanism, and skip connection. [27] proposed the message passing neural network (MPNN), which could generalize several graph neural network and graph convolutional network approaches. [28] proposed the non-local neural network (NLNN), which unifies several “self-attention”-style methods. However, the model is not explicitly defined on graphs in the original paper. Focusing on specific application domains, [27] and [28] only give examples of how to generalize other models using their frameworks, and they do not provide a review of other graph neural network models. [29] provides a review of graph attention models. [30] proposed the graph network (GN) framework, which has a strong capability to generalize other models. However, the graph network model is highly abstract, and [30] only gives a rough classification of the applications.

[31] and [32] are the most up-to-date survey papers on GNNs, and they mainly focus on GNN models. [32] categorizes GNNs into five groups: graph convolutional networks, graph attention networks, graph auto-encoders, graph generative networks, and graph spatial-temporal networks. Our paper uses a different taxonomy from [32]. We introduce graph convolutional networks and graph attention networks in Sec. 2.2.2, as they contribute to the propagation step. We present graph spatial-temporal networks in Sec. 2.2.1, as these models are usually used on dynamic graphs. We introduce graph auto-encoders in Sec. 2.2.3, as they are trained in an unsupervised fashion. Finally, we introduce graph generative networks among the applications of graph generation (see Sec. 3.3.1).

The rest of this survey is organized as follows. In Sec. 2, we introduce various models in the graph neural network family. We first introduce the original framework and its limitations. Then we present variants that try to address these limitations. Finally, we introduce several general frameworks proposed recently. In Sec. 3, we introduce several major applications of graph neural networks in structural scenarios, non-structural scenarios, and other scenarios. In Sec. 4, we propose four open problems of graph neural networks as well as several future research directions. We conclude the survey in Sec. 5.

2 MODELS

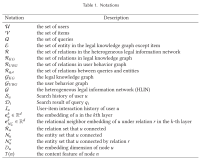

Graph neural networks are useful tools on non-Euclidean structures, and various methods have been proposed in the literature to improve the model’s capability. In Sec. 2.1, we describe the original graph neural network proposed in [33]. We also list the limitations of the original GNN in representation capability and training efficiency. In Sec. 2.2, we introduce several variants of graph neural networks that aim to address these limitations. These variants operate on different graph types and use different propagation functions and advanced training methods. In Sec. 2.3, we present three general frameworks that generalize and extend several lines of work. In detail, the message passing neural network (MPNN) [27] unifies various graph neural network and graph convolutional network approaches; the non-local neural network (NLNN) [28] unifies several “self-attention”-style methods; and the graph network (GN) [30] could generalize almost every graph neural network variant mentioned in this paper. Before going further into the different sections, we give the notations that will be used throughout the paper. Detailed descriptions of the notations can be found in Table 1.

2.1 Graph Neural Networks

The concept of the graph neural network (GNN) was first proposed in [33], which extended existing neural networks to process data represented in graph domains. In a graph, each node is naturally defined by its features and its related nodes. The target of a GNN is to learn, for each node, a state embedding h_v ∈ R^s that contains the information of its neighborhood. The state embedding h_v is an s-dimensional vector of node v and can be used to produce an output o_v, such as the node label. Let f be a parametric function, called the local transition function, that is shared among all nodes and updates the node state according to the input neighborhood. Let g be the local output function that describes how the output is produced. Then h_v and o_v are defined as follows:
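The two defining equations did not survive in this copy. In the published survey they take the form below. The symbols x_v (features of node v), x_co[v] (features of the edges incident to v), h_ne[v] (states of the neighbors of v), and x_ne[v] (features of those neighbors) are not introduced anywhere else in this copy, so treat the notation as a reconstruction:

```latex
\begin{aligned}
h_v &= f\left(x_v,\; x_{co[v]},\; h_{ne[v]},\; x_{ne[v]}\right) \\
o_v &= g\left(h_v,\; x_v\right)
\end{aligned}
```

Read this way, f is applied repeatedly to every node until the states stop changing, which is the fixed point that the limitations below refer to.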

Limitations. Though experimental results showed that the GNN is a powerful architecture for modeling structural data, the original GNN still has several limitations. Firstly, it is inefficient to update the hidden states of nodes iteratively until a fixed point is reached. If we relax the fixed-point assumption, we can design a multi-layer GNN to obtain a stable representation of a node and its neighborhood. Secondly, the GNN uses the same parameters in every iteration, while most popular neural networks use different parameters in different layers, which serves as a hierarchical feature extraction method. Moreover, the update of node hidden states is a sequential process that can benefit from RNN kernels like GRU and LSTM. Thirdly, edges may carry informative features that cannot be effectively modeled in the original GNN. For example, the edges in a knowledge graph have relation types, and message propagation through different edges should differ according to their types. Besides, how to learn the hidden states of edges is also an important problem. Lastly, fixed points are unsuitable if we focus on the representation of nodes instead of graphs, because the distribution of representations at the fixed point will be much smoother in value and less informative for distinguishing individual nodes.

2.2 Variants of Graph Neural Networks

In this subsection, we present several variants of graph neural networks. Sec. 2.2.1 focuses on variants operating on different graph types. These variants extend the representation capability of the original model. Sec. 2.2.2 lists several modifications to the propagation step (convolution, gate mechanism, attention mechanism, and skip connection) that allow models to learn higher-quality representations. Sec. 2.2.3 describes variants that use advanced training methods to improve training efficiency. An overview of the different variants of graph neural networks can be found in Fig. 2.

2.2.1 Graph Types

In the original GNN [33], the input graph consists of nodes with label information and undirected edges, which is the simplest graph format. However, there are many variants of graphs in the world. In this subsection, we introduce some methods designed to model different kinds of graphs.

Directed Graphs. The first variant of graph is the directed graph. An undirected edge, which can be treated as two directed edges, only indicates that a relation exists between two nodes. Directed edges, however, can carry more information than undirected edges. For example, in a knowledge graph where an edge starts from the head entity and ends at the tail entity, the head entity is the parent class of the tail entity, which suggests we should treat information propagation from parent classes and from child classes differently. DGP [35] uses two kinds of weight matrices, W_p and W_c, to incorporate more precise structural information. The propagation rule is shown as follows:
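The propagation rule itself is missing from this copy. The sketch below assumes it has the two-stage form H^t = σ(D_p^{-1} A_p σ(D_c^{-1} A_c H^{t-1} W_c) W_p), where A_p and A_c are the parent and child adjacency matrices and D_p, D_c their degree matrices. The function names, the ReLU nonlinearity, and the toy three-node hierarchy are illustrative assumptions, not the DGP authors' code.

```python
import numpy as np

def row_normalize(adj):
    """Compute D^{-1} A; rows with zero degree stay all-zero."""
    deg = adj.sum(axis=1, keepdims=True)
    return np.divide(adj, deg, out=np.zeros_like(adj), where=deg > 0)

def dgp_layer(h, adj_parent, adj_child, w_p, w_c):
    """One step: aggregate from children with W_c, then from parents with W_p."""
    relu = lambda x: np.maximum(x, 0.0)
    h_child = relu(row_normalize(adj_child) @ h @ w_c)
    return relu(row_normalize(adj_parent) @ h_child @ w_p)

rng = np.random.default_rng(0)
# Toy hierarchy 0 -> 1 -> 2; adj_child[i, j] = 1 when j is a child of i.
adj_child = np.array([[0, 1, 0],
                      [0, 0, 1],
                      [0, 0, 0]], dtype=float)
adj_parent = adj_child.T.copy()        # adj_parent[i, j] = 1 when j is a parent of i
h = rng.normal(size=(3, 4))            # initial node states
w_c = rng.normal(size=(4, 4))
w_p = rng.normal(size=(4, 2))
out = dgp_layer(h, adj_parent, adj_child, w_p, w_c)
print(out.shape)
```

Keeping W_p and W_c separate is what lets the model weight information arriving from parents differently from information arriving from children.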

Heterogeneous Graphs. The second variant of graph is the heterogeneous graph, which contains several kinds of nodes. The simplest way to process a heterogeneous graph is to convert the type of each node to a one-hot feature vector that is concatenated with the original feature. In addition, GraphInception [36] introduces the concept of the metapath into propagation on heterogeneous graphs. With metapaths, we can group the neighbors according to their node types and distances. GraphInception treats each neighbor group as a sub-graph in a homogeneous graph, performs propagation on it, and concatenates the propagation results from the different homogeneous graphs into a collective node representation. Recently, [37] proposed the heterogeneous graph attention network (HAN), which utilizes node-level and semantic-level attention. The model is able to consider node importance and meta-paths simultaneously.

Graphs with Edge Information. In another variant of graph, each edge carries additional information such as a weight or an edge type. We list two ways to handle this kind of graph. Firstly, we can convert the graph to a bipartite graph in which the original edges also become nodes and each original edge is split into two new edges, one between the edge node and the begin node and one between the edge node and the end node. The encoder of G2S [38] uses the following aggregation function for neighbors:

Dynamic Graphs. Another variant of graph is the dynamic graph, which has a static graph structure and dynamic input signals. To capture both kinds of information, DCRNN [40] and STGCN [41] first collect spatial information with GNNs, then feed the outputs into a sequence model such as a sequence-to-sequence model or a CNN. In contrast, Structural-RNN [42] and ST-GCN [43] collect spatial and temporal messages at the same time. They extend the static graph structure with temporal connections so that traditional GNNs can be applied to the extended graphs.

2.2.2 Propagation Types

The propagation step and output step are of vital importance in the model for obtaining the hidden states of nodes (or edges). As we list below, there are several major modifications to the propagation step of the original graph neural network model, while researchers usually follow a simple feed-forward neural network setting in the output step. A comparison of the different GNN variants can be found in Table 2. The variants use different aggregators to gather information from each node’s neighbors and specific updaters to update the nodes’ hidden states.

Convolution. There is increasing interest in generalizing convolutions to the graph domain. Advances in this direction are often categorized as spectral approaches and non-spectral (spatial) approaches. Spectral approaches work with a spectral representation of the graphs.

Spectral Network. [45] proposed the spectral network. The convolution operation is defined in the Fourier domain by computing the eigendecomposition of the graph Laplacian. The operation can be defined as the multiplication of a signal x ∈ R^N (a scalar for each node) with a filter g_θ = diag(θ) parameterized by θ ∈ R^N:
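The spectral filtering just described can be sketched as follows. This assumes the usual form g_θ ⋆ x = U g_θ(Λ) Uᵀ x, where U holds the eigenvectors of the normalized Laplacian L = I − D^{-1/2} A D^{-1/2}. The function names and the 4-cycle example graph are illustrative assumptions.

```python
import numpy as np

def normalized_laplacian(adj):
    """L = I - D^{-1/2} A D^{-1/2} for a symmetric adjacency matrix."""
    deg = adj.sum(axis=1)
    d_inv_sqrt = np.zeros_like(deg)
    nonzero = deg > 0
    d_inv_sqrt[nonzero] = deg[nonzero] ** -0.5
    return np.eye(len(adj)) - d_inv_sqrt[:, None] * adj * d_inv_sqrt[None, :]

def spectral_conv(adj, x, theta):
    """Filter signal x in the Fourier domain: U diag(theta) U^T x."""
    _, u = np.linalg.eigh(normalized_laplacian(adj))  # eigenvalues ascending
    return u @ (theta * (u.T @ x))

# 4-cycle graph: every node has degree 2.
adj = np.array([[0, 1, 0, 1],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [1, 0, 1, 0]], dtype=float)
x = np.array([1.0, 2.0, 3.0, 4.0])

y_identity = spectral_conv(adj, x, np.ones(4))           # all-pass filter
y_low = spectral_conv(adj, x, np.array([1.0, 0, 0, 0]))  # keep only eigenvalue 0
print(y_identity, y_low)
```

With θ set to all ones the filter returns x unchanged, and keeping only the zero-eigenvalue component replaces every entry with the mean of x on this regular graph. The full eigendecomposition is also what makes the approach expensive on large graphs and ties the learned filter to one graph structure.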

All of these models use the original graph structure to denote relations between nodes. However, there may be implicit relations between different nodes, and the Adaptive Graph Convolution Network (AGCN) is proposed to learn these underlying relations [49]. AGCN learns a “residual” graph Laplacian and adds it to the original Laplacian matrix. As a result, it has proven effective on several graph-structured datasets. In addition, [50] presents a Gaussian process-based Bayesian approach (GGP) to solve semi-supervised learning problems. It shows parallels between the model and the spectral filtering approaches, which offers insights from another perspective. However, in all of the spectral approaches mentioned above, the learned filters depend on the Laplacian eigenbasis, which in turn depends on the graph structure; that is, a model trained on a specific structure cannot be directly applied to a graph with a different structure.

Non-spectral approaches define convolutions directly on the graph, operating on spatially close neighbors. The major challenge of non-spectral approaches is defining the convolution operation for differently sized neighborhoods while maintaining the local invariance of CNNs.

DGCN. [52] proposed the dual graph convolutional network (DGCN) to jointly consider local consistency and global consistency on graphs. It uses two convolutional networks to capture the local and global consistency and adopts an unsupervised loss to ensemble them. The first convolutional network is the same as Eq. 14. The second network replaces the adjacency matrix with a positive pointwise mutual information (PPMI) matrix:

However, [1] does not use the full set of neighbors in Eq. 19 but a fixed-size set of neighbors obtained by uniform sampling. [1] also suggests three aggregator functions.
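Eq. 19 is not reproduced in this copy. The sketch below assumes the GraphSAGE-style update h_v ← σ(W · [h_v ‖ AGGREGATE({h_u : u ∈ sampled N_v})]) with a mean aggregator. The helper names, the adjacency-list input, and sampling with replacement when a node has too few neighbors are illustrative assumptions.

```python
import numpy as np

def sample_neighbors(neighbors, size, rng):
    """Uniformly draw a fixed-size neighbor set (with replacement if too few)."""
    return rng.choice(neighbors, size=size, replace=len(neighbors) < size)

def sage_mean_layer(h, adj_list, w, sample_size, rng):
    """Mean-aggregate sampled neighbors, concatenate with self, transform."""
    out = np.empty((h.shape[0], w.shape[1]))
    for v, neighbors in enumerate(adj_list):
        sampled = sample_neighbors(neighbors, sample_size, rng)
        h_neigh = h[sampled].mean(axis=0)               # mean aggregator
        out[v] = np.maximum(np.concatenate([h[v], h_neigh]) @ w, 0.0)
    return out

rng = np.random.default_rng(0)
adj_list = [[1, 2], [0], [0, 3], [2]]   # neighbors of each node (non-empty)
h = rng.normal(size=(4, 3))             # current node embeddings
w = rng.normal(size=(6, 5))             # acts on [self || neighborhood]
h_next = sage_mean_layer(h, adj_list, w, sample_size=2, rng=rng)
print(h_next.shape)
```

Because the per-node cost depends on `sample_size` rather than on the true degree, the work per layer stays bounded even for high-degree nodes.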

Recently, structure-aware convolution and Structure-Aware Convolutional Neural Networks (SACNNs) have been proposed [56]. Univariate functions are used as filters, and they can deal with both Euclidean and non-Euclidean structured data.

Gate. Several works attempt to use a gate mechanism like GRU [57] or LSTM [58] in the propagation step to reduce the restrictions of the former GNN models and improve the long-term propagation of information across the graph structure. [59] proposed the gated graph neural network (GGNN), which uses Gated Recurrent Units (GRU) in the propagation step, unrolls the recurrence for a fixed number of steps T, and uses backpropagation through time to compute gradients.

The node v first aggregates messages from its neighbors, where A_v is the sub-matrix of the graph adjacency matrix A that denotes the connections of node v with its neighbors. The GRU-like update functions incorporate information from the other nodes and from the previous timestep to update each node’s hidden state. Here a gathers the neighborhood information of node v, and z and r are the update and reset gates. LSTMs are also used in a similar way to GRUs in propagation processes based on a tree or a graph.
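The GGNN equations are missing from this copy. The GRU-like update described above (aggregate a, update gate z, reset gate r) can be sketched as follows, assuming the standard GRU form and a single dense adjacency matrix instead of GGNN's separate incoming and outgoing edge blocks. The parameter names in `params` are illustrative.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def ggnn_step(h, adj, params):
    """One GRU-style propagation step over all nodes at once."""
    a = adj @ h + params["b"]                                    # gather neighbor states
    z = sigmoid(a @ params["Wz"] + h @ params["Uz"])             # update gate
    r = sigmoid(a @ params["Wr"] + h @ params["Ur"])             # reset gate
    h_tilde = np.tanh(a @ params["W"] + (r * h) @ params["U"])   # candidate state
    return (1.0 - z) * h + z * h_tilde

rng = np.random.default_rng(0)
n, d = 4, 3
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
params = {name: rng.normal(scale=0.5, size=(d, d))
          for name in ["Wz", "Uz", "Wr", "Ur", "W", "U"]}
params["b"] = np.zeros(d)

h = np.tanh(rng.normal(size=(n, d)))    # initial states in (-1, 1)
for _ in range(5):                      # unroll for a fixed number of steps T = 5
    h = ggnn_step(h, adj, params)
print(h.shape)
```

Each new state is a gated blend of the old state and a tanh candidate, so states that start in (-1, 1) stay bounded no matter how many steps are unrolled.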

The introduction of separate parameter matrices for each child k allows the model to learn more fine-grained representations, conditioned on the states of a unit’s children, than the Child-Sum Tree-LSTM. The two types of Tree-LSTMs can be easily adapted to graphs. The graph-structured LSTM in [61] is an example of the N-ary Tree-LSTM applied to a graph. However, it is a simplified version, since each node in the graph has at most 2 incoming edges (from its parent and sibling predecessor). [44] proposed another variant of the Graph LSTM based on the relation extraction task. The main difference between graphs and trees is that the edges of graphs have labels, and [44] uses different weight matrices to represent different labels. [62] proposed the Sentence LSTM (S-LSTM) for improving text encoding. It converts text into a graph and uses the Graph LSTM to learn the representation. The S-LSTM shows strong representation power in many NLP problems. [63] proposed a Graph LSTM network to address the semantic object parsing task. It uses a confidence-driven scheme to adaptively select the starting node and determine the node updating sequence. It follows the same idea of generalizing existing LSTMs to graph-structured data but has a specific updating sequence, whereas the methods mentioned above are agnostic to the order of nodes.

Attention. The attention mechanism has been successfully used in many sequence-based tasks such as machine translation [64]–[66] and machine reading [67]. [68] proposed the graph attention network (GAT), which incorporates the attention mechanism into the propagation step. It computes the hidden state of each node by attending over its neighbors, following a self-attention strategy. [68] defines a single graph attentional layer and constructs arbitrary graph attention networks by stacking this layer.
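A single-head graph attentional layer of this kind can be sketched as below. This assumes the coefficient form from the GAT paper, α_ij = softmax_j(LeakyReLU(aᵀ[W h_i ‖ W h_j])), taken over each node's neighbors plus the node itself. The function names are illustrative, and the output nonlinearity is omitted for brevity.

```python
import numpy as np

def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)

def gat_layer(h, adj, w, a):
    """Return attention coefficients alpha and aggregated features alpha @ (h W)."""
    wh = h @ w                                   # (N, F') transformed features
    f = wh.shape[1]
    # a^T [Wh_i || Wh_j] splits into a term for node i plus a term for node j.
    e = leaky_relu((wh @ a[:f])[:, None] + (wh @ a[f:])[None, :])
    mask = (adj + np.eye(len(adj))) > 0          # attend over neighbors and self
    e = np.where(mask, e, -np.inf)
    e = e - e.max(axis=1, keepdims=True)         # numerically stable softmax
    alpha = np.exp(e)
    alpha = alpha / alpha.sum(axis=1, keepdims=True)
    return alpha, alpha @ wh

rng = np.random.default_rng(0)
adj = np.array([[0, 1, 1, 0],
                [1, 0, 0, 0],
                [1, 0, 0, 1],
                [0, 0, 1, 0]], dtype=float)
h = rng.normal(size=(4, 3))
w = rng.normal(size=(3, 2))
a = rng.normal(size=4)                           # attention vector of length 2 * F'
alpha, h_next = gat_layer(h, adj, w, a)
print(alpha.round(2))
```

Each row of `alpha` sums to 1 and is zero outside the node's neighborhood, so nodes of any degree are handled by the same parameters.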
The layer computes the coefficients in the attention mechanism of the node pair (i, j) by: Moreover, the layer uses multi-head attention, similarly to [66], to stabilize the learning process. It applies K independent attention mechanisms to compute the hidden states and then concatenates their features (or computes the average), resulting in the following two output representations: The attention architecture in [68] has several properties: (1) the computation over node-neighbor pairs is parallelizable, so the operation is efficient; (2) it can be applied to graph nodes with different degrees by assigning arbitrary weights to neighbors; (3) it can be applied easily to inductive learning problems. Besides GAT, the Gated Attention Network (GAAN) [69] also uses the multi-head attention mechanism. However, it uses a self-attention mechanism to gather information from the different heads, replacing the average operation of GAT.

Skip connection. Many applications unroll or stack graph neural network layers to achieve better results, since more layers (i.e., k layers) let each node aggregate more information from neighbors k hops away. However, many experiments have observed that deeper models do not improve performance and can even perform worse [2]. This is mainly because more layers also propagate noisy information from an exponentially increasing number of expanded neighborhood members. A straightforward method to address the problem, the residual network [70], comes from the computer vision community. But even with residual connections, GCNs with more layers do not perform as well as the 2-layer GCN on many datasets [2]. [71] proposed a Highway GCN, which uses layer-wise gates similar to highway networks [72]. The output of a layer is summed with its input using gating weights:
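The gating equation is missing here. A minimal sketch of the gated sum follows, assuming the highway-network form T = σ(h_in W + b) and h_next = T ⊙ h_out + (1 − T) ⊙ h_in. The names `h_in`, `h_out`, `w`, and `b` are illustrative.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def highway_combine(h_in, h_out, w, b):
    """Blend a layer's input and output with an input-dependent gate."""
    gate = sigmoid(h_in @ w + b)          # T(h_in), one gate value per feature
    return gate * h_out + (1.0 - gate) * h_in

rng = np.random.default_rng(0)
h_in = rng.normal(size=(4, 3))            # states entering the layer
h_out = rng.normal(size=(4, 3))           # states produced by the layer
w = np.zeros((3, 3))

carry = highway_combine(h_in, h_out, w, b=-50.0)     # gate near 0: keep the input
transform = highway_combine(h_in, h_out, w, b=50.0)  # gate near 1: keep the output
print(np.allclose(carry, h_in), np.allclose(transform, h_out))
```

The two extreme bias values show the range of the gate: the layer can pass its input through almost untouched or replace it almost entirely, and training picks a point in between for each feature.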

[74] studies the properties and resulting limitations of neighborhood aggregation schemes. It proposed the Jumping Knowledge Network, which can learn adaptive, structure-aware representations. For each node, the Jumping Knowledge Network selects at the last layer from all of the intermediate representations (which “jump” to the last layer), which lets the model adapt the effective neighborhood size for each node as needed. [74] uses three approaches (concatenation, max-pooling, and LSTM-attention) in the experiments to aggregate information. The Jumping Knowledge Network performs well in experiments on social, bioinformatics, and citation networks. It can also be combined with models like Graph Convolutional Networks, GraphSAGE, and Graph Attention Networks to improve their performance.

Hierarchical Pooling. In computer vision, a convolutional layer is usually followed by a pooling layer to obtain more general features. Similarly, a lot of work focuses on designing hierarchical pooling layers on graphs. Complicated and large-scale graphs usually carry rich hierarchical structures, which are of great importance for node-level and graph-level classification tasks. To explore such inner features, Edge-Conditioned Convolution (ECC) [75] designs its pooling module with a recursive downsampling operation. The downsampling method is based on splitting the graph into two components by the sign of the largest eigenvector of the Laplacian. DIFFPOOL [76] proposed a learnable hierarchical clustering module that trains an assignment matrix in each layer:

S^(l) = softmax(GNN_{l,pool}(A^(l), X^(l)))    (31)

where X^(l) is the node feature matrix and A^(l) is the coarsened adjacency matrix of layer l.
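Eq. 31 only defines the assignment matrix. The sketch below additionally assumes the coarsening step from the DIFFPOOL paper, X^(l+1) = Sᵀ Z and A^(l+1) = Sᵀ A S, where Z is the output of a separate embedding GNN. Both GNNs are reduced here to a single mean-aggregation layer, and all function and weight names are illustrative.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def diffpool(adj, x, w_embed, w_pool):
    """Coarsen a graph: soft-assign N nodes to K clusters, then pool."""
    a_hat = adj + np.eye(len(adj))
    a_norm = a_hat / a_hat.sum(axis=1, keepdims=True)   # mean over self + neighbors
    z = np.maximum(a_norm @ x @ w_embed, 0.0)           # embedding GNN output Z
    s = softmax(a_norm @ x @ w_pool, axis=1)            # assignment matrix S, Eq. 31
    return s, s.T @ z, s.T @ adj @ s                    # S, X^(l+1), A^(l+1)

rng = np.random.default_rng(0)
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)             # path graph on 4 nodes
x = rng.normal(size=(4, 5))
w_embed = rng.normal(size=(5, 3))
w_pool = rng.normal(size=(5, 2))                        # K = 2 clusters
s, x_next, adj_next = diffpool(adj, x, w_embed, w_pool)
print(x_next.shape, adj_next.shape)
```

Because every row of S sums to 1, the total edge weight is preserved by the coarsening: the entries of `adj_next` sum to the same value as the entries of `adj`.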

2.2.3 Training Methods

The original graph convolutional neural network has several drawbacks in training and optimization. Specifically, GCN requires the full graph Laplacian, which is computationally expensive for large graphs. Furthermore, the embedding of a node at layer L is computed recursively from the embeddings of all its neighbors at layer L − 1. Therefore, the receptive field of a single node grows exponentially with the number of layers, so computing the gradient for a single node is costly. Finally, GCN is trained independently for a fixed graph, so it lacks the ability for inductive learning.

Sampling. GraphSAGE [1] is a comprehensive improvement on the original GCN. To solve the problems mentioned above, GraphSAGE replaces the full graph Laplacian with learnable aggregation functions, which are key to performing message passing and generalizing to unseen nodes. As shown in Eq. 19, it first aggregates neighborhood embeddings, concatenates them with the target node’s embedding, then propagates the result to the next layer. With learned aggregation and propagation functions, GraphSAGE can generate embeddings for unseen nodes. GraphSAGE also uses neighbor sampling to alleviate receptive field expansion. PinSage [77] proposed an importance-based sampling method. By simulating random walks starting from target nodes, this approach chooses the top T nodes with the highest normalized visit counts. FastGCN [78] further improves the sampling algorithm. Instead of sampling neighbors for each node, FastGCN directly samples the receptive field for each layer. FastGCN uses importance sampling, where the importance factor is calculated as below: In contrast to the fixed sampling methods above, [79] introduces a parameterized and trainable sampler to perform layer-wise sampling conditioned on the former layer. Furthermore, this adaptive sampler can find the optimal sampling importance and reduce variance simultaneously.
Following reinforcement learning, SSE [80] proposed Stochastic Fixed-Point Gradient Descent for GNN training. This method views the embedding update as a value function and the parameter update as a policy function. During training, the algorithm alternately samples nodes to update embeddings and samples labeled nodes to update parameters.

Receptive Field Control. [81] proposed control-variate-based stochastic approximation algorithms for GCN that use the historical activations of nodes as a control variate. This method limits the receptive field to the 1-hop neighborhood but uses the historical hidden state as an affordable approximation.

Data Augmentation. [82] focused on the limitations of GCN, which include that GCN requires many additional labeled data for validation and also suffers from the localized nature of the convolutional filter. To address these limitations, the authors proposed Co-Training GCN and Self-Training GCN to enlarge the training dataset. The former method finds the nearest neighbors of the training data, while the latter follows a boosting-like approach.

Unsupervised Training. GNNs are typically used for supervised or semi-supervised learning problems. Recently, there has been a trend to extend auto-encoders (AE) to graph domains. Graph auto-encoders aim to represent nodes as low-dimensional vectors through unsupervised training. The Graph Auto-Encoder (GAE) [83] first uses GCNs to encode the nodes in the graph. Then it uses a simple decoder to reconstruct the adjacency matrix and computes the loss from the similarity between the original adjacency matrix and the reconstructed matrix.
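The GAE equations are missing from this copy. The encode, decode, and compare pipeline described above can be sketched as follows, assuming a two-layer GCN encoder Z = Â ReLU(Â X W0) W1 with Â = D̃^{-1/2}(A + I)D̃^{-1/2}, and an inner-product decoder σ(Z Zᵀ). The unweighted binary cross-entropy loss and all names are illustrative simplifications.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def normalize_adj(adj):
    """Symmetric normalization with self-loops: D~^{-1/2} (A + I) D~^{-1/2}."""
    a_tilde = adj + np.eye(len(adj))
    d_inv_sqrt = a_tilde.sum(axis=1) ** -0.5
    return d_inv_sqrt[:, None] * a_tilde * d_inv_sqrt[None, :]

def gcn_encoder(adj, x, w0, w1):
    """Two-layer GCN producing one embedding row per node."""
    a_hat = normalize_adj(adj)
    return a_hat @ np.maximum(a_hat @ x @ w0, 0.0) @ w1

def decode(z):
    """Inner-product decoder: predicted edge probability for every node pair."""
    return sigmoid(z @ z.T)

def reconstruction_loss(adj, adj_rec, eps=1e-9):
    """Mean binary cross-entropy between original and reconstructed adjacency."""
    return -np.mean(adj * np.log(adj_rec + eps)
                    + (1.0 - adj) * np.log(1.0 - adj_rec + eps))

rng = np.random.default_rng(0)
adj = np.array([[0, 1, 1, 0],
                [1, 0, 1, 0],
                [1, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
x = np.eye(4)                                   # featureless graph: one-hot inputs
w0 = rng.normal(scale=0.5, size=(4, 8))
w1 = rng.normal(scale=0.5, size=(8, 2))

z = gcn_encoder(adj, x, w0, w1)                 # node embeddings
adj_rec = decode(z)
loss = reconstruction_loss(adj, adj_rec)
print(z.shape, float(loss))
```

No labels appear anywhere in this pipeline: the adjacency matrix is both the input and the training target, which is what makes the procedure unsupervised.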

[83] also trains the GAE model in a variational manner, and the resulting model is named the variational graph auto-encoder (VGAE). Furthermore, Berg et al. use GAE in recommender systems and have proposed the graph convolutional matrix completion model (GC-MC) [84], which outperforms other baseline models on the MovieLens dataset. The Adversarially Regularized Graph Auto-encoder (ARGA) [85] employs generative adversarial networks (GANs) to regularize a GCN-based graph auto-encoder so that it follows a prior distribution. There are also several other graph auto-encoders, such as NetRA [86], DNGR [87], SDNE [88], and DRNE [89]; however, they do not use GNNs in their frameworks.

2.3 General Frameworks

Apart from the different variants of graph neural networks, several general frameworks have been proposed that aim to integrate different models into a single framework. [27] proposed the message passing neural network (MPNN), which unified various graph neural network and graph convolutional network approaches. [28] proposed the non-local neural network (NLNN), which unifies several “self-attention”-style methods [66], [68], [90]. [30] proposed the graph network (GN), which unified the MPNN and NLNN methods as well as many other variants like Interaction Networks [4], [91], Neural Physics Engine [92], CommNet [93], structure2vec [7], [94], GGNN [59], Relation Network [95], [96], Deep Sets [97], and Point Net [98].